TI's TCI6636 integrates 4 ARM A15 cores with 8 C66x DSPs, for a complete LTE/LTE-Advanced base station on a chip

Texas Instruments (TI) has introduced the next generation of its multicore system on a chip (SOC) architecture, Keystone II, at the Mobile World Congress this week. The company also announced the first device that employs the new architecture, the TCI6636 base station on a chip. Tom Flanagan, Director of Technical Strategy for Wireless Base Station Infrastructure at TI, says that in the Keystone II, TI has upgraded the underlying silicon technology from 40nm to 28nm. The company had developed twelve 40nm Keystone chips in just 18 months, he said, each with ~1B transistors. The new process node raises transistor density enough to integrate 2-3B transistors per chip.

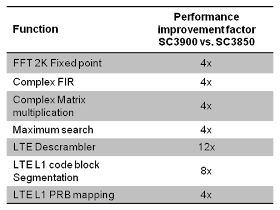

TI designed the TCI6636 to be used at the high end of the small cell market segment, or to be grouped together to make a macro cell while lowering cost and power requirements. In the TCI6636, TI has integrated ARM's latest A15 cores, but with its own proprietary cache coherency system. TI's Keystone II memory system increases support from 8 cores in the previous Keystone architecture to up to 32 cores in the new architecture. Flanagan says that TI's strategy is to use standard cores from ARM and to implement its own enhancements around the cores, in order to retain the full benefits of the ARM ecosystem. The TI designs can still achieve up to a 4X performance improvement over competitors, according to Flanagan.

In the TCI6636, TI adds accelerators to the base Keystone architecture for wireless infrastructure applications, with integrated switch functions in the I/O blocks. The new SOC has eight C66x DSP cores, each with a dedicated 1MB memory subsystem. General-purpose processing is performed by a quad-core configuration of four ARM A15s sharing 4MB of memory. An additional 6MB of memory is shared across the 12 DSP + CPU cores, for a total of 18MB of on-chip memory. Along with the large on-chip memory, TI has added dual 72-bit DDR3 interfaces for external memory. Flanagan says that this "beast" of a fast memory subsystem accounts for much of the performance increase in the TCI6636.
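The 18MB total follows directly from the per-block figures quoted above. A minimal Python tally makes the arithmetic explicit; the constant and function names are illustrative only and are not TI terminology.

```python
# On-chip memory tally for the TCI6636, using only the figures quoted in the article.
# All names below are hypothetical labels chosen for this sketch.
DSP_CORES = 8
DSP_LOCAL_MB = 1     # dedicated memory per C66x DSP core
ARM_SHARED_MB = 4    # shared by the four ARM A15 cores
CHIP_SHARED_MB = 6   # shared across all 12 DSP + CPU cores


def total_on_chip_mb():
    """Sum the DSP-local, ARM-shared, and chip-wide shared memory in MB."""
    return DSP_CORES * DSP_LOCAL_MB + ARM_SHARED_MB + CHIP_SHARED_MB


print(total_on_chip_mb())  # 18
```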

As for processor performance, Flanagan says that the four ARM A15s together provide 17,500 Dhrystone MIPS (DMIPS, millions of instructions per second), while the eight DSP cores achieve 320 billion multiply-accumulates per second (GMACS) and 160 billion floating-point operations per second (GFLOPS). Flanagan said that TI worked closely with ARM on development of the A15 core, and that the TCI6636 is the first implementation of a quad-core configuration and the first in which the cores run at a clock rate above 1GHz.
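The aggregate DSP figures can be broken down per core by simple division. The sketch below assumes the load is spread evenly across the eight C66x cores, which the article does not state explicitly; the names are illustrative.

```python
# Per-core DSP throughput derived from the aggregate figures quoted above.
# Assumption: the eight C66x cores contribute equally to the totals.
DSP_CORES = 8
TOTAL_GMACS = 320    # billion multiply-accumulates per second, all DSP cores
TOTAL_GFLOPS = 160   # billion floating-point operations per second, all DSP cores


def per_core(total, cores=DSP_CORES):
    """Divide an aggregate throughput figure evenly across the cores."""
    return total / cores


print(per_core(TOTAL_GMACS))   # 40.0
print(per_core(TOTAL_GFLOPS))  # 20.0
```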

With the introduction of the TCI6636, TI now has a range of three base station on a chip SOCs, from the 40nm ARM Cortex-A8-based TMS320TCI6612 and TMS320TCI6614 that the company introduced at the Femtocell World Summit in London last year, to the new 28nm ARM A15-based TCI6636:

- TCI6612 supports Cat 1–5 LTE (max. of 300Mbps downlink, 75Mbps uplink) and HSPA+, and can be operated in dual mode (4G/3G) with up to 64 users.

- TCI6614 adds advanced receiver algorithms, and more sophisticated schedulers, for simultaneous dual-mode support of up to 128 users.

- TCI6636 adds to the 6614 capability with Cat-7 LTE support (300Mbps downlink, 150Mbps uplink), and LTE-Advanced support for a 40 MHz bandwidth with carrier aggregation, and up to 256 users.

Three TCI6636 SOCs can be grouped together to form a macro cell base station

Flanagan outlined how features built into the TCI6636 can lower the cost and power of a conventional macro cell architecture. By connecting three of the devices with TI's HyperLink bus, the network processor for system control and packet processing can be eliminated, saving ~30 watts and $50. He estimates that eliminating a separate Ethernet switch saves 10 watts and $200, while the built-in Serial RapidIO (SRIO) switch saves 10 watts and $125. The multi-antenna interface built into the TCI6636 replaces a function that is typically implemented in an FPGA, saving 25 watts and $250, according to Flanagan's calculations. The bottom line, he says, is a cumulative savings of 75 watts and a cost reduction of $625. Further power savings are possible by shutting down one of the SOCs during off-peak hours in base stations where full capacity is not required.
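Flanagan's bottom-line figures can be checked by summing the four line items he cites. The following is a small sketch of that arithmetic; the component labels are shorthand chosen for this example, and the ~30 watt figure is treated as exactly 30.

```python
# (component eliminated or absorbed, watts saved, dollars saved), as quoted by Flanagan.
# Labels are shorthand for this sketch; "~30 watts" is taken as 30.
SAVINGS = [
    ("network processor", 30, 50),
    ("Ethernet switch", 10, 200),
    ("SRIO switch", 10, 125),
    ("antenna interface FPGA", 25, 250),
]


def totals(items):
    """Return (total watts saved, total dollars saved) over all line items."""
    watts = sum(w for _, w, _ in items)
    dollars = sum(d for _, _, d in items)
    return watts, dollars


print(totals(SAVINGS))  # (75, 625)
```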

TI expects to begin sampling the TCI6636 SoC in the second half of 2012.
